Introduction

Modern graphics is very different from how it used to be. I spent my early years growing up with, and later developing with, OpenGL/WebGL. The essence of this type of graphics was the *state machine*: a representation of the GPU's global state. The state was initialised through a series of commands that were processed in order; each one could select which part of the state to focus on, and update it. Once the state was correctly set up, you could ask the GPU to render the current buffers, and they would pass through a fixed-function pipeline: a set-in-stone series of shader programs that went from the original vertices to the image on screen. As hardware improved, and GPUs became capable of processing multiple threads at once, it became clear that these ideas were old-fashioned. The limited capability meant that rendering was very linear, but the era of multi-processing and custom GPU operations was being born. This meant that the linear system could be turned into a rendering graph: a series of interconnected pipelines in which the result of one could feed into the input of another. Suddenly, workloads could be parallelised, and more complicated effects could be achieved more efficiently. This, coupled with the advent of GPGPU, meant that compute and graphics could be mixed and matched, and simulations and graphics went hand in hand. This guide aims to bring you from the old OpenGL way of thinking right up to the modern era, ready to take advantage of all the power of modern GPUs and their pipelines.

WebGL

For this exposition, I will look at a simple example of WebGL online: https://github.com/mdn/dom-examples/tree/main/webgl-examples/tutorial/sample7. There are several files in there to look at: draw-scene.js, init-buffers.js, and webgl-demo.js. We will not go through all the code in detail, but give an idea of how WebGL works as a state machine.

Where to start?

The first thing that we need for any rendering in the browser, be it WebGL or WebGPU, is a canvas. Just as a painter needs something to paint on, so do we! The canvas element is an HTML element that provides this, and you specify it with an id (so you can select it), a width and a height.

<canvas id="glcanvas" width="640" height="480"></canvas>

You can then get the GL context, a handle to the graphics system, by calling getContext with the context type:

const canvas = document.querySelector("#glcanvas");

const gl = canvas.getContext("webgl");

The context allows you to access the state machine, and call commands on it to query and update the state. It is the context in which the state resides.

Modifying the state

In most cases, any command we call on the context object will modify the state. Let’s take some examples. The simplest is the ability to set the background colour of the canvas:

gl.clearColor(0.0, 0.0, 0.0, 1.0);

gl.clear(gl.COLOR_BUFFER_BIT);

Here you are doing two things: setting the clearColor in the state to RGBA(0,0,0,1), and then clearing the colour buffer to that colour. Both are part of the state. As you can see, you don’t pass the RGBA values to the gl.clear call; you update the value in the state, and then request that the colour buffer be cleared with it. There are no variables being passed around, and the colour buffer is not an ArrayBuffer that you have allocated and later update. The state encapsulates all these things: it contains the buffer itself, and it contains the clearColor RGBA value. This is the essence of the state machine, and how it relates to the commands you pass.
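To make the state-machine idea concrete, here is a minimal sketch in plain JavaScript. It is a toy model and not how WebGL is actually implemented: the ToyContext class, its fields, its flag value and the tiny 2×2 colour buffer are all hypothetical names invented for illustration. It only mimics the shape of the gl.clearColor and gl.clear calls.

```javascript
// Toy model of a graphics state machine (hypothetical, not the real WebGL API).
class ToyContext {
  constructor(width, height) {
    this.COLOR_BUFFER_BIT = 1; // arbitrary flag value chosen for this toy
    // State owned by the context: the clear colour and the colour buffer itself.
    this.clearColorValue = [0, 0, 0, 0];
    this.colorBuffer = new Float32Array(width * height * 4); // RGBA per pixel
  }

  // Only updates the stored state; nothing is written to the buffer yet.
  clearColor(r, g, b, a) {
    this.clearColorValue = [r, g, b, a];
  }

  // Reads the clear colour from the state and fills the colour buffer with it.
  clear(mask) {
    if ((mask & this.COLOR_BUFFER_BIT) === 0) return;
    for (let i = 0; i < this.colorBuffer.length; i += 4) {
      this.colorBuffer.set(this.clearColorValue, i);
    }
  }
}

const toy = new ToyContext(2, 2);
toy.clearColor(0.0, 0.0, 0.0, 1.0); // update state
toy.clear(toy.COLOR_BUFFER_BIT);    // act on the state
console.log(Array.from(toy.colorBuffer.slice(0, 4))); // RGBA of the first pixel
```

Note that clear never receives a colour or a buffer as an argument; both live inside the context, which is the point the text above makes about WebGL.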

Rendering

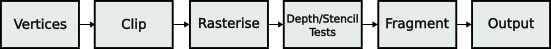

The core of WebGL is built around the largely fixed pipeline shown above. It takes a collection of vertices, connected together as triangles (or other primitives such as points and lines), and processes them for display on the screen/canvas. Some stages of the pipeline, namely the vertex and fragment stages, can be controlled through custom shaders, but overall the pipeline proceeds in much the same way for every render. The final output of the pipeline is the color buffer, the part of the framebuffer that the fragment shader populates.

Framebuffer

For the rendering pipeline to work, WebGL has a framebuffer that consists of several different buffers. These hold the outputs of different stages of the pipeline and, ultimately, the final result that is sent to the screen. For example, the depth buffer stores the depth of each fragment after projection, and is used to discard fragments that lie behind ones already drawn; the stencil buffer is used to mask fragments so that only part of the final color buffer is rendered. Sometimes the depth and stencil buffers are combined into a single Depth/Stencil buffer. Which buffers are present is controlled when creating the context:

const gl = canvas.getContext("webgl", { depth: true, stencil: true });

Shaders – Vertex and Fragment

There are two main shaders in WebGL: the vertex shader and the fragment shader. The vertex shader is used to transform model vertices to their position in homogeneous coordinate space (through the familiar Model View Projection matrix). This is then projected into 2D space and clipped to the viewport. After this, the triangles are rasterized, using depth to make sure that the triangles closest to the screen remain on top. The depth is tested, and the stencil applied, to mark those fragments that we don’t need to compute. Finally, the fragment shader calculates the colour of a pixel (fragment) and places it in the color buffer. It is here that colour is computed from material properties and light sources, which is the essence of colour: the result of light reflected from a material.
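The journey of a single vertex from homogeneous coordinates to a position on the canvas can be sketched in plain JavaScript. This is only an illustration under stated assumptions: the helper names (transformPoint, toNdc, toViewport) are hypothetical, the matrix is stored column-major as a flat array of 16 numbers, and the example matrix is a deliberately minimal perspective-like matrix that just copies -z into w rather than a full projection matrix.

```javascript
// Multiply a column-major 4x4 matrix (flat array of 16 numbers) by a vec4.
function transformPoint(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// Perspective divide: clip space (x, y, z, w) -> normalised device coordinates.
function toNdc([x, y, z, w]) {
  return [x / w, y / w, z / w];
}

// Map NDC x and y in [-1, 1] to a width x height viewport
// (origin at the bottom-left corner, as with gl.viewport).
function toViewport([nx, ny], width, height) {
  return [(nx + 1) * 0.5 * width, (ny + 1) * 0.5 * height];
}

// Toy matrix (an assumption for this sketch): identity for x, y, z, and w = -z.
const toyProjection = [
  1, 0, 0, 0,  // column 0
  0, 1, 0, 0,  // column 1
  0, 0, 1, -1, // column 2
  0, 0, 0, 0,  // column 3
];

const clip = transformPoint(toyProjection, [1, 1, -2, 1]); // what gl_Position would hold
const ndc = toNdc(clip);
const pixel = toViewport(ndc, 640, 480);
console.log(clip, ndc, pixel);
```

Only the first step corresponds to code you write yourself in the vertex shader; the divide and the viewport mapping are carried out by the pipeline.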

The shaders are written in GLSL. The vertex shader outputs the homogeneous coordinates through the special variable gl_Position:

gl_Position = uProjectionMatrix * uModelViewMatrix * aVertexPosition;

The output of the fragment shader is the gl_FragColor variable that decides the RGBA value of a particular pixel. For example,

gl_FragColor = vec4(texelColor.rgb * vLighting, texelColor.a);

Information can be passed from the vertex shader to the fragment shader through interpolation. The use of so-called barycentric coordinates means that variables defined at a per-vertex level can be interpolated across the face of a triangle, and passed to the fragment shader.
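The interpolation described above can be sketched on the CPU. The following is a minimal illustration, assuming a 2D triangle in screen space; the function names barycentric and interpolate are hypothetical. It also ignores the perspective correction that real GPUs apply, so treat it as the idea rather than what the hardware does exactly.

```javascript
// Barycentric weights of point p with respect to the 2D triangle (a, b, c).
// Each weight is the signed area of the sub-triangle opposite a vertex,
// divided by the signed area of the whole triangle.
function barycentric(p, a, b, c) {
  const area = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]);
  const wA = ((b[0] - p[0]) * (c[1] - p[1]) - (c[0] - p[0]) * (b[1] - p[1])) / area;
  const wB = ((c[0] - p[0]) * (a[1] - p[1]) - (a[0] - p[0]) * (c[1] - p[1])) / area;
  return [wA, wB, 1 - wA - wB];
}

// Blend three per-vertex values (arrays of equal length) using the weights.
function interpolate(weights, valueA, valueB, valueC) {
  return valueA.map(
    (_, i) => weights[0] * valueA[i] + weights[1] * valueB[i] + weights[2] * valueC[i]
  );
}

// A triangle with a red, a green and a blue vertex.
const a = [0, 0], b = [4, 0], c = [0, 4];
const red = [1, 0, 0], green = [0, 1, 0], blue = [0, 0, 1];

const weights = barycentric([1, 1], a, b, c);
const fragmentValue = interpolate(weights, red, green, blue);
console.log(weights, fragmentValue);
```

The point (1, 1) sits closest to the red vertex, so red receives the largest weight. A value such as vLighting in the fragment shader snippet above reaches each fragment as this kind of blend of the three per-vertex values.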

Scene composition

The final piece of the puzzle is scene composition. Again, for WebGL, this proceeds through commands that update the global state of the system. Attributes, such as positions and texture coordinates, are passed through commands that point to buffers in GPU memory:

gl.bindBuffer(gl.ARRAY_BUFFER, buffers.position);

gl.vertexAttribPointer(
  programInfo.attribLocations.vertexPosition,
  numComponents,
  type,
  normalize,
  stride,
  offset
);

gl.enableVertexAttribArray(programInfo.attribLocations.vertexPosition);

These commands tell WebGL to bind to a buffer in memory, and then describe the layout of the binary data contained within. Parameters such as matrices (Model, View, Projection for example) are passed as uniform variables, and then bound to input parameters in the GLSL code.

gl.uniformMatrix4fv(
  programInfo.uniformLocations.projectionMatrix,
  false,
  projectionMatrix
);

Finally, after many steps and bindings, we ask WebGL to draw the buffers:

gl.drawElements(gl.TRIANGLES, vertexCount, type, offset);

The pipeline then renders to the color buffer, which the browser swaps (or blits) onto the canvas.
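What the attribute layout and the indexed draw call describe can be mimicked on the CPU. The sketch below is an illustration only: readAttribute and assembleTriangles are hypothetical helpers, and the interleaved layout (three position floats followed by two texture-coordinate floats per vertex) is an assumption chosen for the example, not necessarily the layout used in the sample code.

```javascript
// Four vertices, each stored as x, y, z, u, v (5 floats = 20 bytes per vertex).
const vertexData = new Float32Array([
  -1, -1, 0,  0, 0,
   1, -1, 0,  1, 0,
   1,  1, 0,  1, 1,
  -1,  1, 0,  0, 1,
]);
const indices = new Uint16Array([0, 1, 2, 0, 2, 3]); // two triangles forming a quad

// Read one attribute of one vertex, using the same stride/offset idea
// (both in bytes) that gl.vertexAttribPointer describes.
function readAttribute(buffer, vertexIndex, numComponents, strideBytes, offsetBytes) {
  const start = vertexIndex * strideBytes + offsetBytes;
  return Array.from(new Float32Array(buffer, start, numComponents));
}

// Group indices in threes, as drawing with gl.TRIANGLES does.
// firstIndex counts indices here; the real gl.drawElements offset is in bytes.
function assembleTriangles(indexArray, vertexCount, firstIndex) {
  const triangles = [];
  for (let i = firstIndex; i + 2 < firstIndex + vertexCount; i += 3) {
    triangles.push([indexArray[i], indexArray[i + 1], indexArray[i + 2]]);
  }
  return triangles;
}

const STRIDE = 5 * 4;    // bytes per vertex
const UV_OFFSET = 3 * 4; // texture coordinates start after the three position floats

// Resolve every triangle corner to its position attribute.
const triangles = assembleTriangles(indices, indices.length, 0).map((tri) =>
  tri.map((v) => readAttribute(vertexData.buffer, v, 3, STRIDE, 0))
);
const uvOfVertex2 = readAttribute(vertexData.buffer, 2, 2, STRIDE, UV_OFFSET);
console.log(triangles.length, uvOfVertex2);
```

The same buffer serves both attributes; only the offset changes. That is why the layout has to be described to WebGL explicitly, since the buffer itself is just bytes.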

Conclusion

In this part we have given a very brief overview of the main parts of WebGL in an attempt to build a mental image of it. We have seen that we simply add a canvas element to our webpage, and that it gives us the WebGL context. This is a global GPU state that contains all the configuration and data that allow the pipeline to run. The pipeline is largely fixed, with some control offered through shaders and through the various commands that update the global state. This state and the buffers (such as vertices) are passed through the pipeline, processed by the different shaders, and rendered into the framebuffer in different forms: as depth, stencils and colours. The vertices represent the geometry and form of an object, and the fragments represent the colour that comes from light and material reflectance. The final color buffer is then blitted to the canvas element, and the process repeats for every frame to create the output. This is, in a nutshell, the basic underlying process of WebGL. Having internalized this mental model, we can prepare ourselves to move into the world of WebGPU. A break from the rigidity of WebGL, it will free us to do more complex things, and give us greater control over every part of the pipeline and how it is constructed.

In the next part, WebGPU – Part 2: External Dependencies, we begin our journey into WebGPU by looking at external dependencies that we need to get started.